Hello Followfox Community!

I’m one of those people who are not great at writing prompts for AI image generators, crafting those meticulous, long strings of text that do the magic. I don’t mind tweaking them to adjust details, but coming up with fresh, long prompts is not my cup of tea.

Since longer, higher-quality prompts typically result in better images from Stable Diffusion models, I decided to summon the powers of LLMs and try GPT 3.5 as a prompt assistant.

The whole exploration was quite fun, so I will take you on that journey in this post. As usual, we share all the details, code, and thinking behind it. But this time, that is not all: we are adding the results of this exploration to Distillery (our Discord image generation service) as the /crazyprompts command, where you input a simple text prompt and it returns a modified version.

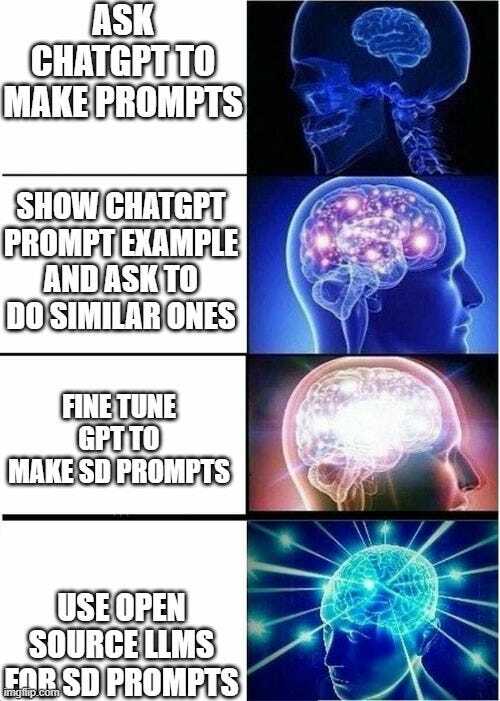

There is nothing new in using ChatGPT or LLMs more broadly to generate Stable Diffusion prompts. However, there are many options for how to do it, and not all approaches result in the same quality prompts. A quick summary of approaches looks something like this, and in this post, we will try the first three.

1 - Ask ChatGPT for a Prompt (zero-shot)

This is as simple as it gets: we go to ChatGPT (link) and ask the model to write a high-quality prompt. We are using the paid GPT-4 version.

In this case, I had a starting prompt idea, “vacuum cleaner transformer.” And here is what you get when using it directly as a Stable Diffusion prompt.

So, I asked ChatGPT to turn this prompt into a high-quality one. You can check the convo here (link), but here is the prompt we got and the resulting image of this prompt.

In a modern, well-lit room with beige walls and a hardwood floor, a transformer robot stands confidently. This transformer is unique: its constructed from parts of a sleek, futuristic vacuum cleaner. The main body of the transformer uses the vacuums cylindrical dust container, which gleams silver and transparent, allowing one to see the multi-colored dust particles trapped inside. Its arms are extended vacuum tubes, flexible and dark grey, with nozzle-like fingers. The legs are made from the main body of the vacuum, solid and powerful, with wheels retracted. Its head, rising above, has bright LED eyes, reminiscent of the vacuums power indicator lights. The overall color scheme of the transformer is metallic silver, with accents of blue and black. A subtle glow emanates from its joints, suggesting its powered on and ready for action

As you can see, the prompt does not follow the structure of typical high-quality Stable Diffusion prompts. The image has improved a ton, but there is still room to do better.

2 - Show ChatGPT a prompt example and ask to make a similar one (one-shot)

ChatGPT can improve a ton when shown an example of the desired output type. There is one problem, though it is relatively easy to solve: I have to find a high-quality example. I found one on https://civitai.com/ and ‘showed’ it to ChatGPT. You can see the convo here (link), and here is the resulting prompt and image.

Masterpiece, utmost precision, intricate design. Picture an enchanting tableau inspired by 'Modern Techno-Baroque.' Visualize a transformer, its form primarily reminiscent of a vacuum cleaner, yet gracefully melded with hints of classic baroque artistry. Capture the balance between its utilitarian nature and aesthetic grandeur, with intricate carvings, gleaming surfaces, and bold contrasts. Radiate the majestic presence of a machine, yet hold subtle hints of antique elegance.

That didn’t go as planned at all! As you can see from the chat history, it follows the template structure too closely, forcing in things that are not necessarily related to the idea. As a result, the generated images are not what we expected.

A lot can be adjusted and fixed here, though. We can find better example prompts and adjust the instruction to encourage more variance. I’d encourage you to experiment and share if you find something that works consistently well.

3 - Fine-tuning GPT 3.5

For context, before this attempt, my only experience with GPT has been through the ChatGPT version, so treat this post as a story of that journey rather than a definitive guide. If you are on the same level, we highly recommend this video from Jeremy Howard about LLMs. It will give you a ton of useful context (link).

First of all, let’s define what we want to achieve after the fine-tuning is done. We would like to:

- Send short, poorly written prompts to our fine-tuned GPT model.

- Receive back a high-quality prompt that captures the essence of the initial prompt.

- (optional) Achieve some variability so we can get multiple takes on the same prompt.

That gives us a good sense of what we will need to do:

- Create a dataset with pairs of short, poor-quality prompts and corresponding high-quality ones

- Fine-tune GPT 3.5 with that dataset

- Test and hopefully enjoy the results.

Creating Fine-tuning Dataset

We have three main tasks here, so let’s discuss each of them in detail:

Getting high-quality prompts, creating lower-quality versions of those prompts, and combining everything into a GPT fine-tuning format.

High-Quality Prompts Dataset

There are many options here, and we chose a very specific approach that can be revisited in the future. We decided to use Midjourney-style prompts because our models are fine-tuned with a lot of data (images and prompts) from Midjourney, so it felt like a natural evolution.

A couple of months ago, we wrote about Analyzing Midjourney data (link), and we are reusing that same data. Interestingly, we found that power users' prompts averaged ~25 words. So, we simply selected 3,000 prompts that were around 25 words long and written by power users. You can find the selected prompts here (link). A quick, random test gave some decent results, so we assumed the dataset was good enough.
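To make the length-based selection concrete, here is a minimal sketch of the idea. It is not the code used for the post: the function name, the `tolerance` of 5 words, and the sample data are hypothetical, and the power-user filter is left out because it depends on the original dataset's columns.

```python
def select_by_length(prompts, target_words=25, tolerance=5, limit=3000):
    """Keep prompts whose word count is within `tolerance` of `target_words`."""
    selected = []
    for prompt in prompts:
        if len(selected) >= limit:
            break  # stop once we have as many prompts as requested
        n_words = len(prompt.split())
        if abs(n_words - target_words) <= tolerance:
            selected.append(prompt)
    return selected


# Tiny made-up sample: one prompt is too short, one fits, one is too long.
candidates = [
    "a cat",
    " ".join(["word"] * 24),
    " ".join(["word"] * 60),
]
print(len(select_by_length(candidates)))
```

Counting words with `split()` is crude (it treats parameters and punctuation as words), but it is enough for picking a rough length band.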

Creating short, poorly written prompts

Now that we had 3,000 supposedly high-quality prompts, we had to create poor-quality versions of those. We can’t do that by hand, so this is where GPT comes in for the first time.

We know that GPT can do summarization well, and more generally, turning a good prompt into a bad one is much easier than the other way around. ChatGPT showed it could do the task, but we cannot feed in thousands of prompts one by one through the chat interface. That’s why we had to use the GPT API and do it via a script.

We used this notebook to turn the prompts into shorter versions (link). In essence, you have to provide your OpenAI API key, give the model a system prompt that explains the task, and then feed in the high-quality prompts one by one. Here is the system prompt we used for the task:

I'll send you a prompt that's too wordy. You will summarize it in a short for 5-7 words, capture subject, composition, verb first and maybe add a few adjectives. Capture intent precisely. Example output from you: A girl in paris, modern art. Reply only with the new short prompt

For some reason, our script kept getting stuck, so we ended up slowing it down quite a bit and running the whole task in small batches. This part needs a rewrite, but one way or another we ended up with our pairs of good and bad prompts.
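If you want to try this step yourself, here is a minimal sketch of a more forgiving loop. It is not the notebook code. One possible cause of a script getting stuck, though only a guess, is requests that hang or hit rate limits, so this version sets a client timeout and retries with exponential backoff. The function names, retry counts, and delays are all hypothetical, and `make_openai_caller` assumes the current `openai` Python package.

```python
import time


def make_openai_caller(system_prompt, model="gpt-3.5-turbo", timeout=30.0):
    """Return a function that sends one prompt to the Chat Completions API."""
    from openai import OpenAI  # imported lazily; needs `pip install openai`

    # The client reads OPENAI_API_KEY from the environment; the timeout makes
    # a hung request raise an error instead of blocking the whole run.
    client = OpenAI(timeout=timeout)

    def call(prompt):
        response = client.chat.completions.create(
            model=model,
            messages=[
                {"role": "system", "content": system_prompt},
                {"role": "user", "content": prompt},
            ],
        )
        return response.choices[0].message.content.strip()

    return call


def shorten_all(prompts, call_model, max_retries=3, base_delay=2.0, sleep=time.sleep):
    """Shorten prompts one by one, retrying with exponential backoff on errors."""
    results = {}
    for prompt in prompts:
        for attempt in range(max_retries):
            try:
                results[prompt] = call_model(prompt)
                break
            except Exception as error:  # broad on purpose: timeouts, rate limits
                if attempt == max_retries - 1:
                    print(f"giving up on {prompt!r}: {error}")
                else:
                    sleep(base_delay * 2 ** attempt)
    return results


# Offline demo with a fake model that fails once, so no API key is needed.
attempts = []


def flaky_model(prompt):
    attempts.append(prompt)
    if len(attempts) == 1:
        raise TimeoutError("simulated hang")
    return " ".join(prompt.split()[:5])


demo = shorten_all(
    ["a very long and wordy prompt about a girl in paris"],
    flaky_model,
    sleep=lambda seconds: None,  # skip the real waiting in the demo
)
print(demo)
```

For a real run you would build the caller with `make_openai_caller(...)`, passing the system prompt quoted above, and hand it to `shorten_all` in place of `flaky_model`. Prompts that still fail after all retries are simply missing from the result, so they can be retried in a later batch.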

Combining Dataset into OpenAI format

Before we could start fine-tuning, we had to combine everything into an OpenAI-specific format. You can read about that here (link).

Now, the important and new part here is that the dataset will need to have three parts: user content (short, bad prompt), assistant content (reply from GPT with the high-quality prompt), and system content that will tell GPT that it now needs to do the opposite and turn bad prompts into good ones. So here is the new system message we crafted.

You are autoregressive language model that works at followfox.ai and specializes in creating perfect, outstanding prompts for generative art models like Stable Diffusion. Your job is to take user ideas, capture ALL main parts, and turn into amazing prompts. You have to capture everything from the user's prompt and then use your talent to make it amazing. You are a master of art styles, terminology, pop culture, and photography across the globe. Respond only with the new prompt.

After the training, we realized that some optimization could be done here to make it shorter and crisper. Here is the full code for data prep.

    # The same system message is attached to every training example.
    system_content = "You are autoregressive language model that works at followfox.ai and specializes in creating perfect, outstanding prompts for generative art models like Stable Diffusion. Your job is to take user ideas, capture ALL main parts, and turn into amazing prompts. You have to capture everything from the user's prompt and then use your talent to make it amazing. You are a master of art styles, terminology, pop culture, and photography across the globe. Respond only with the new prompt."

    formatted_data = []

    # combined_df is a pandas DataFrame with one row per pair:
    # 'short_version' is the short prompt, 'prompt' is the original one.
    for _, row in combined_df.iterrows():
        entry = {
            "messages": [
                {"role": "system", "content": system_content},
                {"role": "user", "content": row['short_version']},
                {"role": "assistant", "content": row['prompt']}
            ]
        }
        formatted_data.append(entry)

And you can find our ready-to-train dataset right here (link). Note that we ended up with close to 2k examples, which is on the high end of the data size typically used in GPT tuning according to the guides we consumed.
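The `formatted_data` list still has to be saved to disk before it can be uploaded. Chat fine-tuning files are JSONL: one JSON object with a `messages` list per line. Below is a minimal sketch of writing and sanity-checking such a file. The helper names and the file path are hypothetical, and the strict system/user/assistant order is simply what this dataset uses, not a general requirement.

```python
import json
import os
import tempfile


def write_jsonl(entries, path):
    """Write one JSON object per line, the layout chat fine-tuning files use."""
    with open(path, "w", encoding="utf-8") as handle:
        for entry in entries:
            handle.write(json.dumps(entry, ensure_ascii=False) + "\n")


def check_jsonl(path):
    """Return the number of examples, raising if any line breaks the pattern."""
    expected_roles = ["system", "user", "assistant"]
    count = 0
    with open(path, encoding="utf-8") as handle:
        for line_number, line in enumerate(handle, start=1):
            messages = json.loads(line)["messages"]
            roles = [message["role"] for message in messages]
            if roles != expected_roles:
                raise ValueError(f"line {line_number}: unexpected roles {roles}")
            if not all(message["content"].strip() for message in messages):
                raise ValueError(f"line {line_number}: empty content")
            count += 1
    return count


# Made-up example entry, shaped like the entries in formatted_data.
example = [
    {
        "messages": [
            {"role": "system", "content": "Respond only with the new prompt."},
            {"role": "user", "content": "A girl in paris, modern art"},
            {"role": "assistant", "content": "placeholder high-quality prompt"},
        ]
    }
]
demo_path = os.path.join(tempfile.gettempdir(), "prompt_pairs_demo.jsonl")
write_jsonl(example, demo_path)
print(check_jsonl(demo_path))
```

Running a check like this before uploading is cheap and catches empty rows left over from failed summarization calls.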

Fine-tuning Process

The process was surprisingly easy and took ~10 lines of code. You will first upload the dataset we prepared, get an ID of that uploaded file from OpenAI, and feed it to the training launch command. See this notebook (link).
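For readers who prefer to see the two steps in code, here is a minimal sketch assuming the current `openai` Python package. It is not a copy of our notebook. The function name and file names are hypothetical, and the stand-in client at the bottom exists only so the flow can be run without an API key.

```python
import os
import tempfile
from types import SimpleNamespace


def launch_fine_tune(client, training_path, base_model="gpt-3.5-turbo"):
    """Upload the JSONL file, then start a fine-tuning job on it."""
    with open(training_path, "rb") as handle:
        uploaded = client.files.create(file=handle, purpose="fine-tune")
    job = client.fine_tuning.jobs.create(training_file=uploaded.id, model=base_model)
    return job.id


# Real usage (needs `pip install openai` and OPENAI_API_KEY in the environment):
#   from openai import OpenAI
#   client = OpenAI()
#   job_id = launch_fine_tune(client, "training_data.jsonl")
#   print(client.fine_tuning.jobs.retrieve(job_id).status)

# Dry run with a stand-in client that mimics only the two calls used above.
fake_client = SimpleNamespace(
    files=SimpleNamespace(
        create=lambda file, purpose: SimpleNamespace(id="file-demo")
    ),
    fine_tuning=SimpleNamespace(
        jobs=SimpleNamespace(
            create=lambda training_file, model: SimpleNamespace(
                id=f"job-for-{training_file}"
            )
        )
    ),
)
demo_path = os.path.join(tempfile.gettempdir(), "fine_tune_demo.jsonl")
with open(demo_path, "w", encoding="utf-8") as handle:
    handle.write("{}\n")
print(launch_fine_tune(fake_client, demo_path))
```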

Once launched, you’ll see the job and its progress on OpenAI’s dashboard (link).

In our case, the job was completed in about 1 hour and cost $7.19. The price goes up quite a bit with the dataset size and system_content length, so be mindful of that. Depending on those choices, a quality fine-tune can cost much less than this or noticeably more.
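To see why the system_content length matters, remember that the system message is repeated in every training example, and training is billed on the tokens in the file multiplied by the number of epochs. The sketch below is back-of-the-envelope arithmetic under loud assumptions: roughly 4 characters per token, 3 epochs, and a placeholder rate of $0.008 per 1K training tokens. Check the current pricing page and use a real tokenizer before relying on the numbers.

```python
def estimate_training_cost(examples, price_per_1k_tokens, epochs=3, chars_per_token=4):
    """Rough cost: every message of every example is billed once per epoch."""
    total_chars = sum(
        len(message["content"])
        for example in examples
        for message in example["messages"]
    )
    tokens_per_epoch = total_chars / chars_per_token  # crude token estimate
    return tokens_per_epoch * epochs * price_per_1k_tokens / 1000


def make_examples(system_content, count):
    """Build made-up examples that differ only in the system message."""
    return [
        {
            "messages": [
                {"role": "system", "content": system_content},
                {"role": "user", "content": "x" * 40},
                {"role": "assistant", "content": "y" * 160},
            ]
        }
        for _ in range(count)
    ]


# Same 1,000 made-up pairs, once with a long and once with a short system message.
cost_long = estimate_training_cost(make_examples("s" * 400, 1000), price_per_1k_tokens=0.008)
cost_short = estimate_training_cost(make_examples("s" * 100, 1000), price_per_1k_tokens=0.008)
print(round(cost_long, 2), round(cost_short, 2))
```

In this toy setup the system message is most of each example, so trimming it cuts the estimate in half. The real saving depends on how long your prompts are relative to the system message.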

Using Fine-tuned Prompt Helper

Once the model is fine-tuned, you will need a model name to use in your code. In this case, it was this one; feel free to test it yourself.

ft:gpt-3.5-turbo-0613:followfox-ai::85z5rcYC

To query it, we wrote some simple code that you can find in this notebook (link). Note that you’ll need to enter your API key there.
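As a rough illustration of what such a query can look like, here is a minimal sketch assuming the current `openai` Python package. It is not the notebook code. Asking for several completions at once with the `n` parameter is our choice for this example, the function name is hypothetical, and the stand-in client only lets the sketch run without an API key. The system message should match the one used in the training data.

```python
from types import SimpleNamespace


def suggest_prompts(client, model, system_content, idea, n=3, temperature=1.0):
    """Ask the fine-tuned model for `n` alternative prompts for one idea."""
    response = client.chat.completions.create(
        model=model,
        messages=[
            {"role": "system", "content": system_content},
            {"role": "user", "content": idea},
        ],
        n=n,  # number of independent completions to return
        temperature=temperature,
    )
    return [choice.message.content.strip() for choice in response.choices]


# Real usage (needs `pip install openai` and OPENAI_API_KEY in the environment):
#   from openai import OpenAI
#   suggest_prompts(OpenAI(), "ft:gpt-3.5-turbo-0613:followfox-ai::85z5rcYC",
#                   system_content, "vacuum cleaner transformer")


# Dry run with a stand-in client that echoes the idea back n times.
def fake_create(model, messages, n, temperature):
    idea = messages[-1]["content"]
    choices = [
        SimpleNamespace(message=SimpleNamespace(content=f"{idea}, take {i + 1}"))
        for i in range(n)
    ]
    return SimpleNamespace(choices=choices)


fake_client = SimpleNamespace(
    chat=SimpleNamespace(completions=SimpleNamespace(create=fake_create))
)
demo = suggest_prompts(
    fake_client, "ft:demo", "Respond only with the new prompt.", "vacuum cleaner transformer"
)
print(demo)
```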

Let’s take a few prompts that we got back from the input of “vacuum cleaner transformer.”:

- vacuum cleaner from the idw transformers universe 3d model

- transformers bumblebee megatron decepticon, vacuum cleaner, tracing,

- 20ft tall robot transforming into a vacuum cleaner

- vacuum cleaner as transformer for illustration for transformers comic in a animated style hong kong action cinema beautiful rendering realistic atmospheric soft focus bullit charlie in motion photography zoomburst

- the vacuum cleaner transform into a humanoid armored heroes with over the shoulder look, sleek, high detailed, from the front, straight front view, Transformer toy box illustration

As we can see, there is a huge variation in prompting style and quality. This is both good and bad, but overall we think it can be a fun way to explore a breadth of ideas when you are just starting to approach a specific subject.

Let’s look at a few images (a biased selection).

One of the prompts caught our eye, so we sent it back to the prompt helper: “20ft tall robot transforming into a vacuum cleaner”.

And here are the first three responses from that request:

- A robot, which is an apartment building cleaner and a great guru of cleaning 20ft tall was a vacuum cleaner

- a realistic photograph of a 20ft tall Transformer robot wearing an apron and holding a duster, flower hat and blue cleaning gloves.

- a robot with a tube is always vacuuming the ground every few feet, a man walking out of a room with a desk, futuristic, 20ft tall

And some of the images that we got with Distillery using these prompts.

To finish our exploration, we added a few LoRAs and slightly modified the prompt:

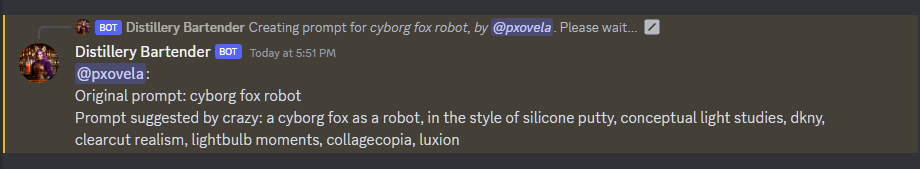

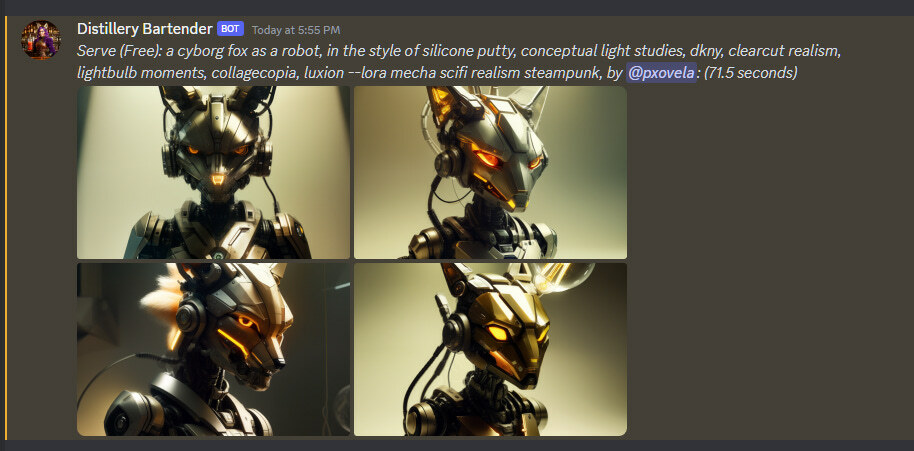

Prompt Helper in Distillery

Overall, we found this fine-tuned model to be fun to play with. So, we are adding it as a test feature to our Discord server so that every user can try it themselves.

There are two main options to use the feature.

The first one is text only. Simply type the /suggest command followed by your simple text prompt and receive an answer from our fine-tuned GPT model. You can do this multiple times to generate a few fun prompts, each usually quite different.

The second option is to use the feature as a parameter during image generation. Simply add --llm crazy to your image prompt. (We called this one crazy because it acts as such.)

In the meantime, we are working on an updated version that ideally should be more consistent after tuning a few things we have mentioned in this post.