Hello everyone,

Last week we tried to implement the idea of finding an optimal learning rate range for a given dataset for the fine-tuning based on the ideas from this paper (Cyclical Learning Rates for Training Neural Networks). You can see that post here (link).

We mentioned that the paper discussed two main ideas: how to find the learning range and more importantly the idea of cycling through this found learning rate range throughout the training to achieve superior results or to achieve the optimal results much faster.

This time we will try to implement that idea of cycling the learning rate and see how it does on our tests.

Last week we tried to implement the idea of finding an optimal learning rate range for a given dataset for the fine-tuning based on the ideas from this paper (Cyclical Learning Rates for Training Neural Networks). You can see that post here (link).

We mentioned that the paper discussed two main ideas: how to find the learning range and more importantly the idea of cycling through this found learning rate range throughout the training to achieve superior results or to achieve the optimal results much faster.

This time we will try to implement that idea of cycling the learning rate and see how it does on our tests.

Experiment Design

The basic idea is to get a range of learning rates and cycle through those values throughout the training. The paper proposes that doing so linearly is the simplest method of accomplishing this but also mentions that other approaches were also tested to achieve similar results.

For determining for how exactly to cycle through these rates the paper proposes the idea of stepsize, which is defined as the number of steps the training will take to go from one end of the learning range to the other end (either up or down). So one cycle will be equal to stepsize *2.

The proposed stepsize length is to use 2 to 10 times the epochsize (the number of steps per each epoch). We find this part a bit odd to be tied to the epochsize for the fine-tuning approaches since the epochs differ a lot based on the size of fine-tuning and often we see a much smaller number of epochs needed on large datasets and orders of magnitude more epochs on the smaller ones. In the latter case, this would mean an insane number of cycles. Potentially we might benefit a bit more from having a target number of total cycles instead of a stepsize defined like this.

To start, we decided to follow the paper’s advice and test stepsize with the proposed 2 to 10 multiplier.

Initial Tests

The first goal was to get the cyclical learning rate idea working for fine-tuning purposes. From the last experiment, for Damon’s dataset an upper bound of 1.13E-06 and since we observed learning to be starting from the very low LR values, we will use 0 as the lower bound.

In that dataset, we had 20 images and we are using 20% for validation runs, leaving us with 16 images. With a batch size of 4, we have an epoch size of 4 steps (16/4=4). We decided to start with the 10 multiplied, so our stepsize would be 10*4=40 steps. Meaning that it will take 40 steps to go from a zero learning rate to the target of 1.13E-06 and then another 40 steps to go back down. For the first run, we let it run for 200 epochs to observe what happens.

To implement all of this, we changed the following parameters in ED2 trainer:

- "lr": 1.13e-06,

- "lr_decay_steps": 40,

- "lr_scheduler": "cosine"

and as a result, we indeed got our cycles going! Let’s look at the learning rate graph:

As expected, we had a total of 800 steps (200 epochs of 4 steps per epoch), and we saw a total of 10 cycles, each cycle taking 80 steps, 40 to go up and 40 to go down. Now let’s take a look at our validation loss graph of this run:

As we can see, it reached the minimum at around 400 steps, stayed somewhat stable but flat for another 200, and then started going up. It’s more insightful if we compare this to the loss graph of a run with a constant learning rate at the midpoint of the range.

Interestingly, there is no significant performance gain that’s clearly visible, both graphs follow a very similar trend in loss with a bit of difference in the slope of loss change depending on where the learning rate was in the cycle.

Next, we tested the stepsize with the multiplier of two, so 8 stepsize, 16 steps per cycle, and in this case, a total of 800/16=50 cycles.

Next, we tested the stepsize with the multiplier of two, so 8 stepsize, 16 steps per cycle, and in this case, a total of 800/16=50 cycles.

In this case, we observe a loss graph following the original constant graph even closer, potentially highlighting the shortfall of the 2 to 10 rule for datasets that have small steps per epoch and require a high epoch count for the training.

In all the cases we saw that the loss values achieved a minimum at ~400 steps.

Final Tests and Outputs

For the final run, we decided to disable validation and get the 20% of the dataset back. So our epoch size increased to 5. The paper proposes to end the training at the lowest learning rate point, this can be achieved by running a half cycle toward the end. So decided to run a total of 5.5 cycles, ie 550 steps total. We set our stepsize to be 50, which means 20 epochs per cycle so we did 110 epochs total.

Additionally, the paper proposes to gradually decrease the learning rate after 3-5 cycles so we did another run where we run 3 cycles at 1.13E-06, 2 cycles at 5.65e-7, and finally 1.5 cycles at 2.83e-7 to end the run on that low point. These are the learning rate graphs for those two runs.

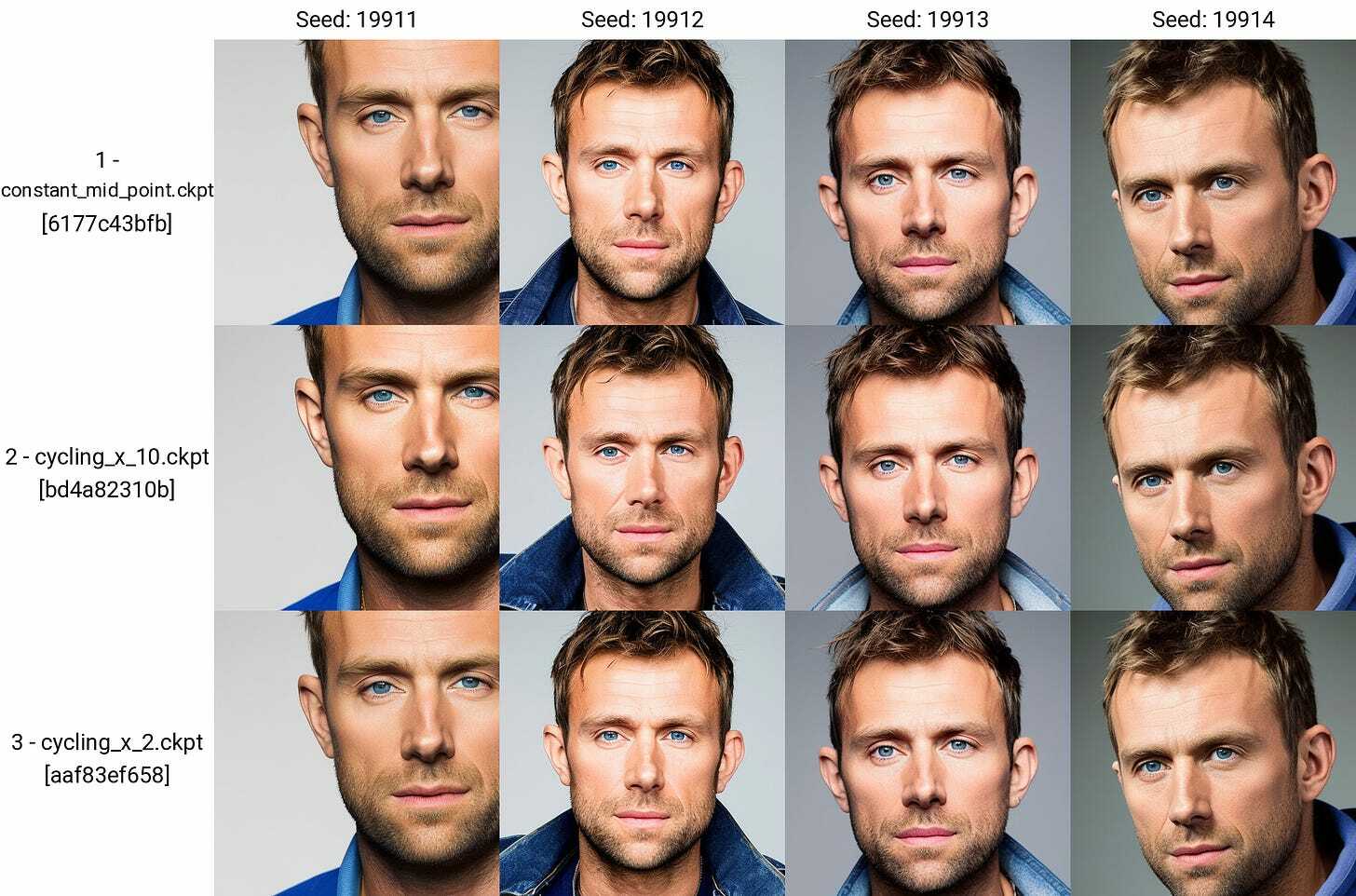

To compare images, we used a baseline with a checkpoint that was trained on the mid-point learning rate with a constant scheduler (like we did in the previous post).

For realistic images, we got results that look very close. Very subjective but cycling_x10 looks marginally better. However, they all feel undertrained when compared to our older models that were not following the learning rate discovery and cycling method. Here is an example from this post (link).

Let’s take a look at other examples as well:

In these examples, it’s even harder to say if one of the three models were superior.

Conclusion

By following the proposed methodology on this particular dataset we didn’t manage to achieve superior results. However, the approach is very doable with the tools we have and at the very least is worth the try. We suspect that this approach might be more interesting in the case of a larger dataset.

Additionally, since our models feel under-trained compared to the ones where we like the output more, we have a few working hypotheses to test in the future. First of all, it might be a matter of taste and we just like the look of “fried” models that in the loss graphs just show as suboptimal. But more importantly, we suspect that the learning rate discovery method might need some modifications for smaller datasets that train quickly and that the true upper boundary is somewhat higher.